Introduction

In today’s digital world, the line between reality and fabrication is becoming increasingly blurred—and much of it is due to the rise of fake news and deepfakes. As a tech blogger, I’ve observed how artificial intelligence has quietly transformed the way information is created, consumed, and, unfortunately, manipulated. What once required a skilled video editor or a deceptive writer can now be done with a few clicks, thanks to advanced AI tools.

Fake news refers to deliberately false or misleading information, often designed to sway public opinion, damage reputations, or simply go viral. Deepfakes take this a step further. These AI-generated videos or audio clips can convincingly mimic real people saying or doing things they never actually did. Together, fake news and deepfakes have become powerful tools in the wrong hands—capable of influencing elections, spreading misinformation during crises, or harming individuals’ credibility in a matter of minutes.

What makes this trend even more concerning is how accessible and realistic these AI tools have become. From free deepfake apps to AI-generated news articles, the technology is not just evolving—it’s exploding in reach and capability.

But it’s not all doom and gloom. The same AI technologies are also being developed to detect and combat these threats. As we explore the role of AI in this space, it’s essential to understand both its creative power and its potential for misuse.

This blog will dive deeper into how fake news and deepfakes are created, how they spread, and what’s being done to stop them—so you can stay informed and protected in the age of artificial intelligence.

What is Fake News? How AI Plays a Role

Fake news is any intentionally false or misleading information that’s presented as legitimate news. It often spreads quickly through social media, messaging apps, and unreliable websites—triggering emotional responses, shaping opinions, and even influencing political or social outcomes. But what’s alarming today is how AI has made the creation and distribution of fake news faster, smarter, and harder to detect.

In the past, fake news relied on clickbait headlines and manually written content. Now, AI tools such as large language models can generate entire articles in seconds that read as convincingly human. These AI-generated texts are often filled with biased narratives, exaggerated claims, or entirely fabricated stories, which makes them seem authentic even when they are misleading or completely untrue. The goal is usually to manipulate public opinion or drive traffic through sensationalism.

AI also plays a major role in how fake news is spread. Algorithms on social platforms are designed to prioritize content that gets the most engagement. Unfortunately, false information often attracts more clicks, shares, and comments than verified news. AI-based recommendation engines unintentionally amplify this problem by showing users more of what they already believe, creating echo chambers where fake news thrives.
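To see why engagement-driven ranking can favor sensational content, here is a toy sketch in Python. The posts, their engagement numbers, and the weights are all made up for illustration, and real platform ranking systems are far more complex. The point is simply that a ranker that only counts clicks, shares, and comments never looks at whether a post is accurate.

```python
# Toy feed ranker: scores posts purely by engagement (hypothetical weights).
ENGAGEMENT_WEIGHTS = {"clicks": 1.0, "shares": 3.0, "comments": 2.0}

def engagement_score(post):
    # Weighted sum of engagement signals; accuracy is never considered.
    return sum(weight * post[signal] for signal, weight in ENGAGEMENT_WEIGHTS.items())

def rank_feed(posts):
    # Highest-engagement posts are shown first.
    return sorted(posts, key=engagement_score, reverse=True)

# Made-up example posts; the "verified" flag is ignored by the ranker.
posts = [
    {"id": "verified-report", "clicks": 900, "shares": 40, "comments": 60, "verified": True},
    {"id": "sensational-rumor", "clicks": 700, "shares": 300, "comments": 250, "verified": False},
    {"id": "local-update", "clicks": 400, "shares": 10, "comments": 15, "verified": True},
]

for post in rank_feed(posts):
    print(post["id"], engagement_score(post))
```

Even though the verified report gets more clicks, the rumor's shares and comments push it to the top of the feed.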

What’s more, AI can now clone writing styles, mimic voices, and create realistic-looking images to support these stories. This makes the line between fact and fiction even harder to draw.

Understanding the link between fake news and deepfakes is critical in this era of information overload. While AI has made it easier to create deceptive content, it’s also being used to develop countermeasures—like fact-checking tools, content authenticity systems, and AI-based detection algorithms. As consumers of digital content, being aware of these tools and the risks they combat is the first step in staying informed and responsible online.

What are Deepfakes? Understanding the Technology Behind Them

Deepfakes are AI-generated videos, images, or audio recordings that convincingly mimic real people doing or saying things they never actually did. The term “deepfake” is a blend of “deep learning” and “fake,” highlighting the role of artificial intelligence in crafting these hyper-realistic forgeries. What makes deepfakes particularly dangerous is how believable they can be—often indistinguishable from genuine content to the average viewer.

At the core of deepfakes is a type of machine learning called deep learning, which relies on neural networks that can process massive amounts of data. One of the most commonly used technologies for generating deepfakes is the Generative Adversarial Network (GAN). A GAN consists of two competing neural networks: one generates fake content (the generator), and the other tries to detect whether it’s fake (the discriminator). As the two keep training against each other, the generator’s output becomes more and more realistic.

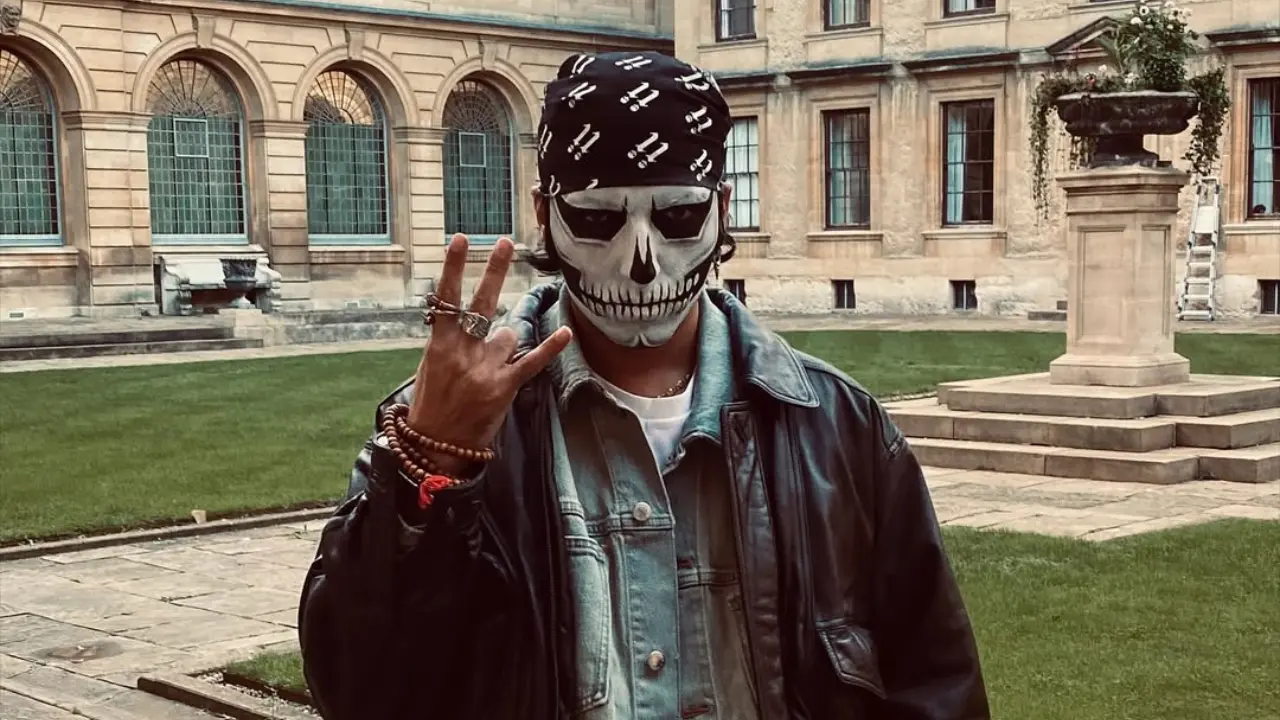
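To make the generator-versus-discriminator idea concrete, here is a deliberately tiny sketch in plain Python. Instead of faces, the "real data" is just numbers drawn around 2.0. The generator can only shift random noise by a learned offset `b`, and the discriminator is a single-weight logistic unit. The function name `train_toy_gan` and all of its settings are illustrative assumptions. Real deepfake GANs use deep neural networks trained on images, but the alternating training loop follows the same pattern.

```python
import math
import random

def sigmoid(s):
    # Clip the logit so math.exp never overflows.
    s = max(-30.0, min(30.0, s))
    return 1.0 / (1.0 + math.exp(-s))

def mean(values):
    return sum(values) / len(values)

def train_toy_gan(steps=3000, batch=128, lr=0.05,
                  real_mean=2.0, real_std=0.5, seed=0):
    """Toy 1-D GAN (hypothetical setup): learns to shift noise toward the real data."""
    rng = random.Random(seed)
    b = 0.0  # generator: G(z) = z + b with z ~ N(0, 1); only the shift b is learned
    w = 0.0  # discriminator: D(x) = sigmoid(w * x), its "probability that x is real"
    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: gradient descent on -[log D(real) + log(1 - D(fake))].
        grad_w = (mean([-(1.0 - sigmoid(w * x)) * x for x in real])
                  + mean([sigmoid(w * x) * x for x in fake]))
        w -= lr * grad_w

        # Generator step (non-saturating loss): gradient descent on -log D(G(z)).
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        grad_b = mean([-(1.0 - sigmoid(w * (z + b))) * w for z in zs])
        b -= lr * grad_b
    return b, w
```

Each loop iteration first nudges the discriminator to score real samples higher and fake ones lower, then nudges the generator so its samples score higher. Running `b, w = train_toy_gan()` should leave `b` close to `real_mean` and the discriminator weight `w` near zero, meaning the discriminator can no longer tell the two apart by their average. Because this discriminator is so simple, it cannot notice differences in spread, which is one reason real GANs need far more capable networks.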

To create a deepfake video, the AI is trained on a large number of images or video frames of the target person, covering different angles, lighting conditions, and facial expressions. It learns patterns in facial movements, voice inflections, and mannerisms. Once trained, the model can overlay the target’s face onto another person’s body, or clone their voice for live or prerecorded content.

Fake news and deepfakes become especially dangerous when they are used together. For example, a deepfake video of a politician making a false statement can be embedded in a fake news article, giving the misinformation a shocking level of authenticity.

As the technology becomes more advanced and accessible, deepfakes are no longer confined to tech labs—they’re now tools available to anyone with basic skills and the right software. This raises serious concerns about privacy, trust, and digital integrity in today’s hyperconnected world.

How AI Helps Create Fake Content

Artificial intelligence has revolutionized content creation—and not always for the better. While AI brings innovation to industries like marketing, design, and automation, it also enables the creation of highly deceptive content with alarming ease. When it comes to fake news and deepfakes, AI plays a central role in automating and scaling the spread of misinformation.

Text-based fake news, for instance, can now be generated using advanced AI language models that mimic human writing. These tools can produce full-length articles, social media posts, and even fabricated interviews that appear legitimate. With just a few keywords or prompts, AI can write persuasive headlines, biased narratives, or misleading stories tailored to target audiences. This makes it easy for bad actors to flood the internet with false information in a matter of minutes.

In the visual realm, AI techniques such as GANs (Generative Adversarial Networks) are used to create deepfake videos and synthetic images. These models are trained on a dataset of real photos or videos and learn to generate fake visuals that closely resemble real ones. From impersonating celebrities to faking confessions or news reports, the potential for misuse is vast and deeply concerning.

Audio deepfakes are another growing threat. AI models trained on voice data can replicate a person’s speech patterns to create realistic fake audio clips. These clips can be used in scams, fake news segments, or social engineering attacks—making it difficult for listeners to trust what they hear.

The combination of AI’s creative power and the speed of digital platforms has turned fake content into a growing weapon of influence. While some of this technology has genuine, positive applications, its misuse continues to challenge the authenticity of what we read, watch, and hear in the age of fake news and deepfakes.

AI Tools Commonly Used for Generating Deepfakes

The creation of deepfakes has become surprisingly accessible, thanks to a growing number of AI tools and platforms designed to generate realistic fake videos, images, and audio. While many of these tools were initially developed for entertainment, research, or artistic purposes, they are now frequently misused in the spread of fake news and deepfakes.

One of the best-known tools is DeepFaceLab, open-source software for swapping faces in videos with impressive realism. It’s widely used by hobbyists and professionals alike for its high-quality output and customization options. Similarly, FaceSwap offers a user-friendly interface for face replacement, making it easier for beginners to get started with deepfake creation.

Zao, a mobile app developed in China, went viral for its ability to insert users’ faces into movie clips within seconds. Its simplicity shows how AI-powered deepfake tools have become as easy to use as social media filters. Other mobile apps like Reface and Wombo also use AI to animate faces or swap them in photos and videos, often for comedic or viral content.

In the audio domain, tools like Descript’s Overdub and iSpeech allow users to clone voices using a small sample of someone’s speech. These voice clones can then be used to generate fake phone calls, interviews, or statements—contributing to the broader threat of fake news and deepfakes.

For developers and researchers, platforms like Runway ML and D-ID offer powerful machine learning tools capable of generating synthetic media on demand, with features like face animation and text-to-video capabilities.

While many of these tools are marketed for legitimate creative uses, their misuse highlights the urgent need for ethical guidelines, public awareness, and detection technologies to prevent the harmful impact of AI-generated deepfakes.

The Dangers of AI-Generated Fake News and Deepfakes

AI-generated fake news and deepfakes pose a serious threat to truth, trust, and safety in the digital age. What was once science fiction has now become a real-world challenge, where fabricated content created by artificial intelligence can manipulate public opinion, damage reputations, and even threaten national security.

One of the most immediate dangers is misinformation during crises. Deepfake videos or fake news articles can quickly spread false details during elections, pandemics, or natural disasters—misleading the public when accurate information is most critical. For example, a deepfake of a world leader announcing a fake military decision could cause mass panic or geopolitical tension within minutes.

Another growing concern is reputation damage and personal harm. AI tools can be used to create fake videos or voice recordings of celebrities, politicians, or everyday individuals saying or doing things they never did. Once such content goes viral, it becomes difficult to undo the harm—even if it’s later proven false.

In the context of social media, echo chambers and algorithm-driven platforms amplify the reach of fake content. AI doesn’t just create misinformation—it also helps spread it. Content that provokes strong emotions often gets more engagement, and unfortunately, fake news tends to be more sensational than the truth.

Financial fraud is also on the rise, with scammers using AI-generated voice deepfakes to impersonate CEOs or family members and trick victims into transferring money. This form of deception is harder to detect than traditional scams, making it more dangerous.

Ultimately, the spread of fake news and deepfakes undermines public trust in digital content. When people begin to question everything they see or hear, even genuine information loses credibility. This growing uncertainty is one of the biggest threats posed by AI-generated fake content today.

Real-World Examples of AI Being Misused

As powerful as artificial intelligence is, its misuse has already led to several disturbing real-world incidents. From political manipulation to personal attacks, the harmful use of AI—especially in the context of fake news and deepfakes—has shown just how easily truth can be distorted in the digital age.

One of the best-known cases came in 2018, when BuzzFeed and filmmaker Jordan Peele released a deepfake video of former U.S. President Barack Obama. Although it was made as a public service announcement to raise awareness about deepfake technology, the video convincingly showed Obama saying things he never said. It demonstrated how easily AI could put false words into the mouths of world leaders, with the potential to trigger political unrest or diplomatic crises.

In another case, reported in 2019, a UK-based energy company lost $243,000 in a scam where criminals used AI-generated voice technology to impersonate the chief executive of its German parent company. The fraudsters convinced the UK firm’s CEO to transfer the funds urgently, because the voice on the phone sounded real. The case shows how AI-generated audio deepfakes are now being used for financial crimes.

In India, deepfake videos have also surfaced in political campaigns. A notable incident involved a political candidate whose face and voice were manipulated to deliver a speech in a different language to appeal to a broader audience. Though it was intended for outreach, critics raised concerns about transparency and the potential for misleading voters.

There are also many instances where deepfake adult content has been created using images of celebrities or private individuals—causing serious emotional and reputational damage. Most of these videos are distributed without consent, raising ethical and legal concerns around AI-generated media.

These examples reflect the growing dangers of fake news and deepfakes in real-world scenarios. As the technology continues to evolve, so does the urgency to regulate and counter its misuse.

How AI is Also Fighting Fake News

While artificial intelligence is often blamed for creating fake content, it’s also becoming one of the most powerful tools in the fight against fake news and deepfakes. Just as AI can generate false information, it can also be trained to detect, flag, and filter it—making it a critical ally in the battle for digital truth.

AI-powered fact-checking tools are one of the first lines of defense. Platforms like Google and Facebook use machine learning algorithms to scan articles, posts, and videos for misleading or inconsistent information. These systems cross-reference content with trusted sources and flag potentially false claims for review. In many cases, users are shown warnings or fact-check labels before they can share questionable content.
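One simple building block behind this kind of cross-referencing is claim matching: comparing a new post against claims that fact-checkers have already reviewed. The sketch below uses word overlap (Jaccard similarity) as a stand-in for the far more capable language models real platforms use. The `CHECKED_CLAIMS` table, the 0.5 threshold, and the function names are hypothetical.

```python
import string

# Hypothetical database of claims that fact-checkers have already reviewed.
CHECKED_CLAIMS = {
    "5G towers spread the virus": "false",
    "The moon landing was filmed in a studio": "false",
    "The city water supply is safe to drink": "true",
}

def tokens(text):
    # Lowercase, drop punctuation, and return the set of words.
    table = str.maketrans("", "", string.punctuation)
    return set(text.lower().translate(table).split())

def jaccard(a, b):
    # Shared words divided by total distinct words (0.0 to 1.0).
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def match_claim(text, checked_claims, threshold=0.5):
    """Return (claim, verdict, score) for the closest checked claim, or None."""
    query = tokens(text)
    best = None
    for claim, verdict in checked_claims.items():
        score = jaccard(query, tokens(claim))
        if score >= threshold and (best is None or score > best[2]):
            best = (claim, verdict, score)
    return best
```

For example, `match_claim("Viral post says 5G towers spread the virus!", CHECKED_CLAIMS)` returns the 5G claim with its `"false"` verdict and a similarity of 0.625, which a platform could use to attach a fact-check label. A post about an unrelated topic returns `None`.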

Natural language processing (NLP) models are also being used to analyze the tone, structure, and credibility of news articles. By understanding the language patterns commonly found in fake news, AI can classify content based on its likelihood of being false. These tools help journalists, researchers, and platforms identify misinformation at scale—far beyond what human moderators alone could handle.
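Here is a minimal sketch of that idea: a multinomial naive Bayes classifier that learns which words appear more often in fake-news-style text than in straight news reporting. The six training headlines are invented for illustration, and production systems use much larger labeled datasets and modern NLP models. Note that a classifier like this learns writing style, not truth, so at best it can flag content for human review.

```python
import math
import string
from collections import Counter

def tokenize(text):
    # Lowercase and strip surrounding punctuation (inner apostrophes and hyphens stay).
    words = (w.strip(string.punctuation).lower() for w in text.split())
    return [w for w in words if w]

class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = {}        # label -> Counter of word frequencies
        self.doc_counts = Counter()  # label -> number of training documents
        self.vocab = set()

    def fit(self, docs, labels):
        for doc, label in zip(docs, labels):
            words = tokenize(doc)
            self.word_counts.setdefault(label, Counter()).update(words)
            self.doc_counts[label] += 1
            self.vocab.update(words)
        return self

    def log_scores(self, doc):
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            for word in tokenize(doc):
                # Add-one smoothing keeps unseen words from zeroing out a class.
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            scores[label] = score
        return scores

    def predict(self, doc):
        scores = self.log_scores(doc)
        return max(scores, key=scores.get)

# Invented training examples, for illustration only.
TRAIN_DOCS = [
    "SHOCKING: secret cure they don't want you to know!",
    "You won't believe what this celebrity said, share before it is deleted!",
    "Miracle trick exposes shocking government secret",
    "The central bank raised interest rates by a quarter point on Tuesday",
    "Officials confirmed the report after reviewing public records",
    "The study was published in a peer-reviewed journal",
]
TRAIN_LABELS = ["fake", "fake", "fake", "real", "real", "real"]

model = NaiveBayes().fit(TRAIN_DOCS, TRAIN_LABELS)
```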

In the world of deepfakes, AI is being trained to detect digital fingerprints that are invisible to the human eye. Deepfake detection tools analyze inconsistencies in lighting, facial movements, eye blinking, and voice modulation to spot signs of manipulation. Companies like Microsoft and startups such as Sensity AI are developing advanced models that can automatically detect altered videos and images before they go viral.
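Blinking is a good example of a measurable cue. A common way to track it is the eye aspect ratio (EAR), which compares the eye's height to its width using six landmark points around the eye. The ratio drops sharply when the eye closes. The sketch below assumes the landmarks have already been extracted by a separate face-landmark detector (not shown), and the 0.2 threshold and two-frame minimum are illustrative values, not settings from any particular product. Newer deepfakes often blink convincingly, so an unusual blink rate is only one weak signal among many.

```python
import math

def eye_aspect_ratio(eye):
    """eye: six (x, y) landmarks p1..p6; p1 and p4 are the eye corners."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # two eyelid-to-eyelid distances
    horizontal = math.dist(p1, p4)                    # corner-to-corner width
    return vertical / (2.0 * horizontal)

def count_blinks(ear_series, threshold=0.2, min_frames=2):
    # A blink is a run of at least `min_frames` consecutive frames with EAR below threshold.
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the clip ends
        blinks += 1
    return blinks

def blinks_per_minute(ear_series, fps, **kwargs):
    # Convert a per-frame EAR series into a blink rate.
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes
```

A detector could compare the resulting rate against a normal human range and treat a clip with almost no blinks as worth a closer look.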

Content verification systems are also emerging, such as digital watermarks and cryptographically signed provenance records (some projects store these on a blockchain) that show where a photo or video came from and whether it has been altered. These technologies aim to restore trust in authentic media and reduce the spread of fake content online.
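The core idea behind signed provenance can be sketched in a few lines: record a cryptographic fingerprint of the file when it is captured, then check it later. This sketch uses an HMAC with a shared secret key from Python's standard library for simplicity; real provenance systems typically use public-key signatures so that anyone can verify a file without holding the secret. The key and function names here are hypothetical.

```python
import hashlib
import hmac

def sign_media(media_bytes, key):
    # Fingerprint the file with SHA-256, then authenticate that fingerprint with the key.
    digest = hashlib.sha256(media_bytes).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()

def verify_media(media_bytes, key, signature):
    # Recompute the signature and compare in constant time.
    expected = sign_media(media_bytes, key)
    return hmac.compare_digest(expected, signature)

# Hypothetical capture-time key and media content.
CAMERA_KEY = b"hypothetical-camera-secret"
original = b"...raw video bytes..."
signature = sign_media(original, CAMERA_KEY)
```

Any change to the bytes, even re-saving the file in another format, breaks the check, so real systems also need a way to record legitimate edits.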

Though it may seem ironic, the same AI that fuels the spread of fake news and deepfakes is also being engineered to stop them—proving that how we use the technology matters more than the technology itself.

Laws and Regulations Around AI-Generated Fake Content

As the threat of fake news and deepfakes grows, governments and tech regulators around the world are starting to take action. The misuse of artificial intelligence to create misleading or harmful content has raised serious legal and ethical concerns, forcing lawmakers to respond with new rules, policies, and penalties.

In the United States, several states have already passed legislation targeting deepfakes. For example, California and Texas have laws that make it illegal to publish malicious deepfakes close to an election or use them to harm someone’s reputation. These laws aim to prevent AI-generated videos or audio clips from influencing public opinion or damaging individuals during political campaigns.

India, too, is working on comprehensive frameworks to regulate AI content. While specific laws around deepfakes are still in development, the Information Technology Rules (2021) already place some responsibility on social media platforms to detect and remove false or harmful content, including AI-generated media. The proposed Digital India Act may go even further in addressing deepfake misuse and AI regulation.

At the global level, the European Union’s AI Act is among the first major efforts to classify and regulate AI systems based on their potential risk. It includes provisions that require transparency for synthetic content—like labeling deepfake videos—and imposes strict rules on high-risk AI applications, including those used to manipulate public opinion.

In addition to government efforts, social media platforms are also setting their own rules. Meta, YouTube, and X (formerly Twitter) have introduced policies to label or remove manipulated media, especially when it could cause real-world harm.

Despite these steps, enforcing laws around fake news and deepfakes remains a challenge. The technology is evolving rapidly, and legal systems often struggle to keep pace. Still, growing awareness and international cooperation are pushing forward much-needed regulation to ensure AI is used ethically and responsibly.

Can You Detect a Deepfake? Tools and Techniques

With deepfakes becoming more realistic and easier to produce, one of the most pressing questions is: can you actually detect them? The answer is yes—but it’s getting harder. Fortunately, both AI-powered tools and manual techniques are being developed to help identify fake news and deepfakes before they cause harm.

On the surface, there are some visual and audio cues that can help spot a deepfake. Look for unnatural blinking, mismatched lighting, odd facial expressions, or lip-sync issues. Often, deepfakes fail to capture fine details like subtle eye movement or realistic skin texture under different lighting. In audio deepfakes, glitches in speech patterns, robotic tone, or missing emotional cues can be warning signs.

However, as deepfake technology improves, manual detection is no longer enough. That’s where advanced AI tools come in.

Deepware Scanner and Sensity AI are platforms specifically designed to detect deepfake videos. They use machine learning to analyze videos frame by frame, looking for signs of manipulation that humans might miss. These tools are used by journalists, cybersecurity experts, and content moderators to filter out deceptive media.

Microsoft Video Authenticator is another notable tool. It assigns a confidence score to a video, indicating how likely it is to be fake. Similarly, FaceForensics++, an open dataset and benchmark tool, helps researchers train models to recognize forged facial footage.
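Frame-by-frame detectors produce a score for each frame, and those scores have to be combined into a single number for the whole video. One simple approach, sketched below, is to smooth the per-frame probabilities with a moving average and report the highest window, so a short manipulated segment is not diluted by an otherwise genuine clip. The per-frame scores are assumed to come from some detector model, and the window size and function names are illustrative; this is not a description of how Video Authenticator or any other specific tool works.

```python
def moving_average(values, window):
    # Average of each run of `window` consecutive frames.
    return [sum(values[i:i + window]) / window for i in range(len(values) - window + 1)]

def video_fake_score(frame_scores, window=3):
    """Combine per-frame 'fake' probabilities (0.0 to 1.0) into one video-level score."""
    if not frame_scores:
        raise ValueError("need at least one frame score")
    if len(frame_scores) < window:
        # Too few frames to smooth: fall back to the plain average.
        return sum(frame_scores) / len(frame_scores)
    # Report the most suspicious stretch of the video, not the whole-clip average.
    return max(moving_average(frame_scores, window))

# Hypothetical per-frame outputs from some detector model.
frame_scores = [0.1, 0.2, 0.9, 0.95, 0.9, 0.1]
print(round(video_fake_score(frame_scores), 3))
```

Here the three suspicious middle frames dominate the result, while the plain average of all six frames would be only 0.525.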

Beyond video, tools like Resemble AI Detector and DeFake are helping analyze voice clips and social media content for deepfake elements, enhancing the fight against audio-based deception.

While detection tools are getting smarter, the best defense remains awareness. Understanding how fake news and deepfakes spread, and learning how to spot them, empowers users to think critically before believing or sharing any digital content.

The Future: How Can We Use AI Responsibly?

As artificial intelligence continues to evolve, its power is undeniable—but so is its potential for misuse. The rise of fake news and deepfakes has shown us how easily AI can blur the lines between truth and illusion. Looking ahead, the challenge isn’t just about improving the technology—it’s about using it responsibly.

Responsible AI starts with transparency. Developers and companies creating AI systems should clearly disclose when content is AI-generated. Whether it’s a chatbot conversation, a synthetic video, or an AI-written article, users deserve to know what’s real and what’s not. Labels and watermarks on AI-generated content can help preserve trust in digital media.

Ethical guidelines and industry standards are also critical. Tech firms must build safety features into their AI tools, such as detection systems that flag potential misuse. This includes limiting access to advanced deepfake generators, verifying users, and disabling harmful use cases. Collaboration between governments, researchers, and private companies is essential to establish shared norms and accountability.

Public education and digital literacy play a big role too. As deepfake detection tools improve, people must also be trained to recognize signs of manipulated content and verify sources before sharing information. Encouraging a culture of critical thinking is just as important as technical solutions.

At a broader level, regulation and governance will shape how AI is used in society. Clear laws on misinformation, data privacy, and AI-generated content can help curb abuse while still allowing innovation to thrive.

AI isn’t inherently good or bad—it’s a tool. The future depends on how we choose to use it. With the right balance of innovation, ethics, and awareness, we can harness AI’s potential while minimizing its risks, especially in the ongoing battle against fake news and deepfakes.

Conclusion

The rapid rise of artificial intelligence has brought both remarkable innovation and serious challenges. Among the most concerning developments are the growing threats posed by fake news and deepfakes. What once took hours or days to fabricate can now be produced in minutes using powerful AI tools—blurring the line between fact and fiction like never before.

From manipulating public opinion to damaging reputations and spreading false information during crises, the misuse of AI has real-world consequences. Deepfakes, in particular, have shown how realistic and dangerous synthetic content can become when placed in the wrong hands. Yet, despite these risks, AI also offers solutions—through detection tools, fact-checking systems, and digital forensics that help fight back against misinformation.

The key lies in responsible development, regulation, and user awareness. Technology will keep advancing, but how we use and govern it will determine its impact. By staying informed, questioning what we see and hear, and supporting ethical AI practices, we can reduce the harm caused by fake news and deepfakes and build a more trustworthy digital future.


FAQs

Q1. What are fake news and deepfakes?

Fake news refers to deliberately false or misleading information presented as news, while deepfakes are AI-generated videos or audio clips that mimic real people’s appearances or voices to spread misinformation.

Q2. How are deepfakes created?

Deepfakes are created using artificial intelligence, especially deep learning models like Generative Adversarial Networks (GANs). These models are trained on large datasets to replicate facial movements, voices, and gestures.

Q3. Can fake news and deepfakes be detected?

Yes. AI-powered tools like Microsoft Video Authenticator, Sensity AI, and Deepware Scanner can help detect fake content. Visual inconsistencies and unnatural speech are common signs of manipulation, while digital watermarks and provenance labels can help confirm where authentic content came from.

Q4. Are there any laws against deepfakes?

Yes, some countries and states have started to regulate deepfakes. For example, several U.S. states have laws restricting deceptive deepfakes around elections, and the EU’s AI Act, adopted in 2024, includes transparency rules requiring deepfakes and other synthetic media to be disclosed as such.

Q5. Is AI only harmful when it comes to fake news?

Not at all. While AI can be misused to spread misinformation, it’s also being used to detect and prevent it. AI-driven fact-checking tools and content authentication technologies are critical in the fight against fake news.

Q6. How can I protect myself from fake content online?

Always verify the source of information, avoid sharing unverified news, use fact-checking websites, and stay informed about common signs of deepfakes. Digital literacy is your best defense.

Q7. Why are fake news and deepfakes so dangerous?

They can influence public opinion, interfere with elections, cause reputational harm, and erode trust in digital content. Their realistic nature makes it harder for people to separate truth from fiction.